The model used in this project was a bi-directional variational autoencoder (VAE) built from long short-term memory (LSTM) recurrent neural networks (RNNs). The input to the network was a sequence of five-dimensional vectors representing strokes. After encoding, Gaussian noise and normalisation were applied to the latent vector to provide a well-formed, regularised latent space. The output of the model was a sequence of six-dimensional vectors parameterising a Gaussian mixture model (GMM), which gives a probability distribution over the stroke to be made at each point of the output sequence.
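To make the five-dimensional input concrete, here is a minimal sketch of one way strokes could be encoded. It assumes the stroke-5 convention commonly used with Sketch-RNN, where each row is `[dx, dy, pen_down, pen_up, end_of_sketch]`; the text above does not spell out the layout, so the column order, the function name `strokes_to_stroke5` and the `max_len` parameter are all illustrative assumptions rather than the project's actual code.

```python
import numpy as np


def strokes_to_stroke5(strokes, max_len):
    """Encode pen strokes as a (max_len, 5) array.

    `strokes` is a list of strokes, each a list of absolute (x, y) points.
    Each output row is [dx, dy, pen_down, pen_up, end_of_sketch]
    (assumed layout, following the usual Sketch-RNN convention).
    """
    rows = []
    prev = np.zeros(2)
    for stroke in strokes:
        for i, point in enumerate(stroke):
            point = np.asarray(point, dtype=float)
            dx, dy = point - prev  # offsets relative to the previous point
            if i == len(stroke) - 1:
                # Last point of a stroke: the pen lifts afterwards.
                rows.append([dx, dy, 0.0, 1.0, 0.0])
            else:
                # Pen stays on the paper and draws to the next point.
                rows.append([dx, dy, 1.0, 0.0, 0.0])
            prev = point

    if len(rows) > max_len:
        raise ValueError("sketch is longer than max_len")

    out = np.zeros((max_len, 5))
    if rows:
        out[: len(rows)] = rows
    # Pad the remainder with the end-of-sketch state.
    out[len(rows):, 4] = 1.0
    return out


if __name__ == "__main__":
    example = [[(0, 0), (1, 0), (1, 1)], [(2, 2), (3, 2)]]
    print(strokes_to_stroke5(example, max_len=7))
```

Storing offsets rather than absolute coordinates keeps the values small and makes the representation independent of where on the canvas the sketch was drawn.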

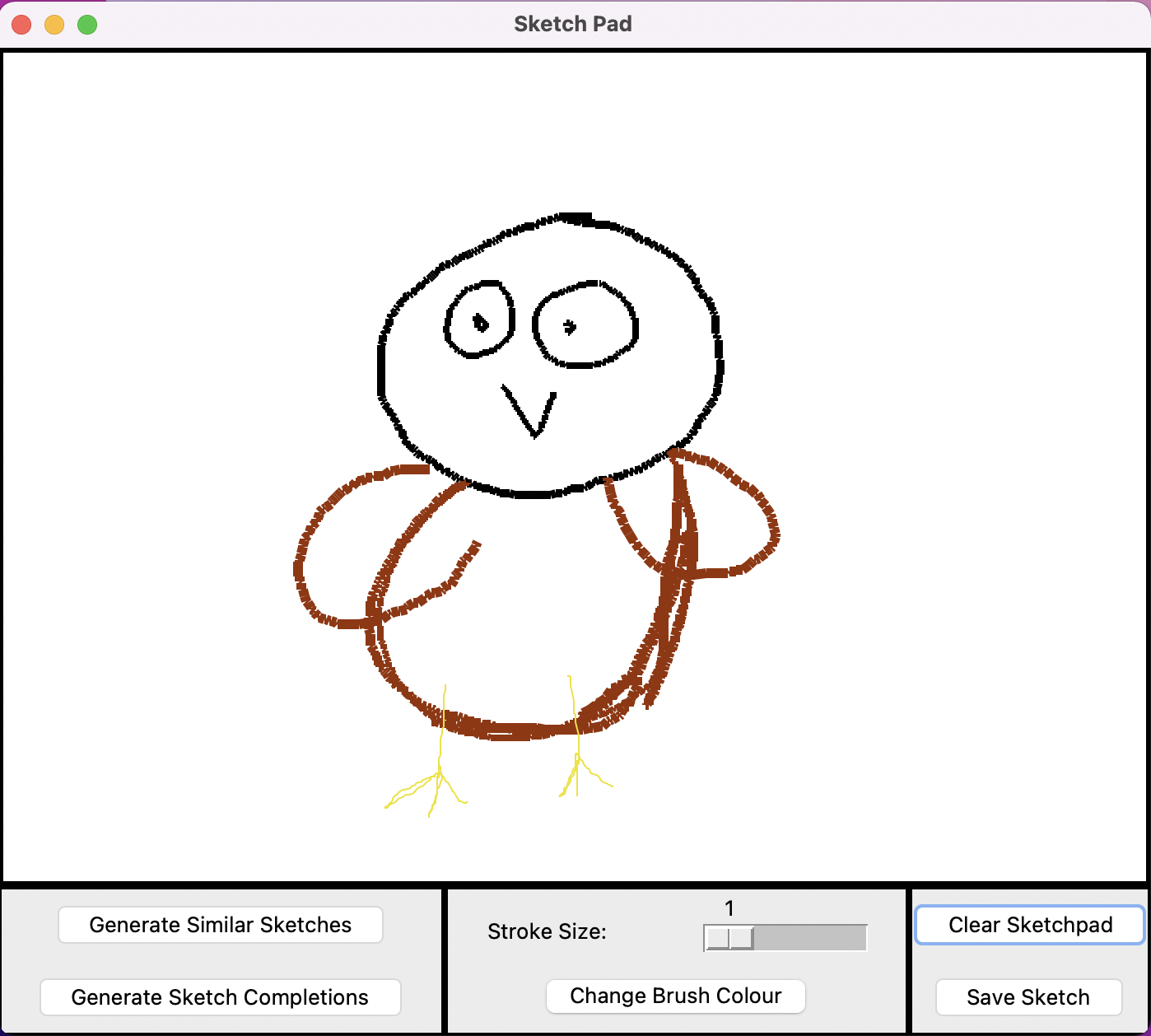

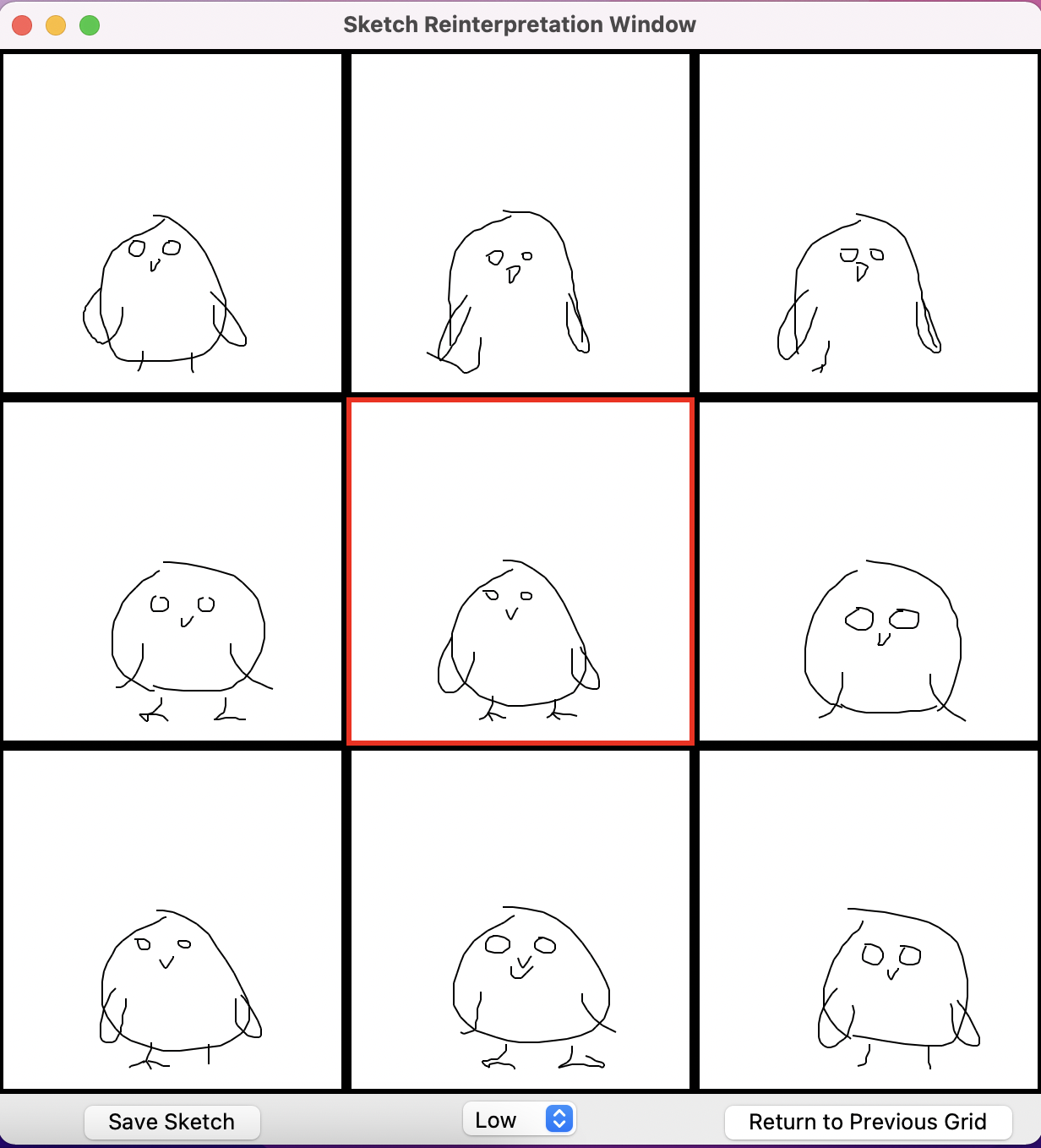

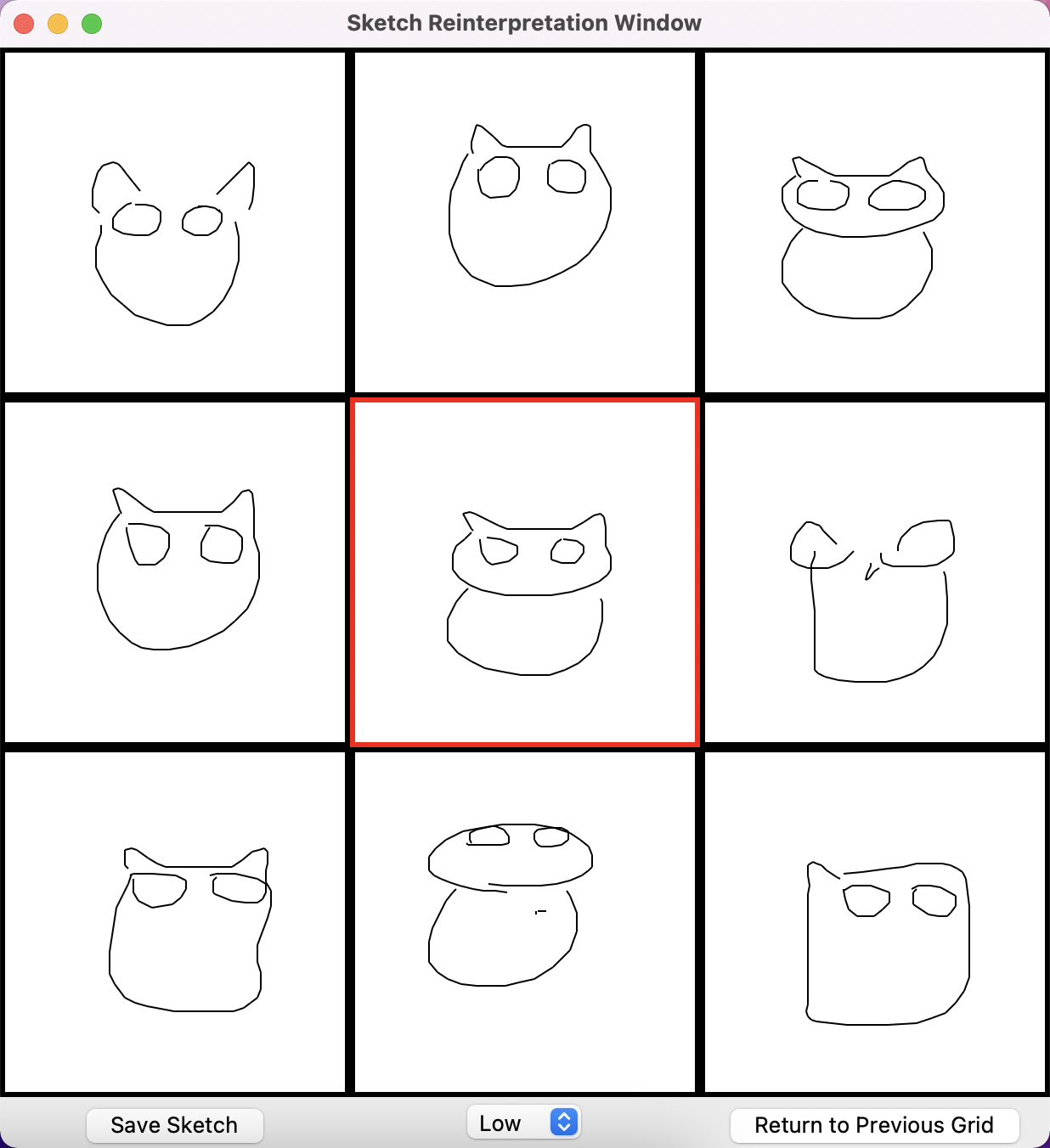

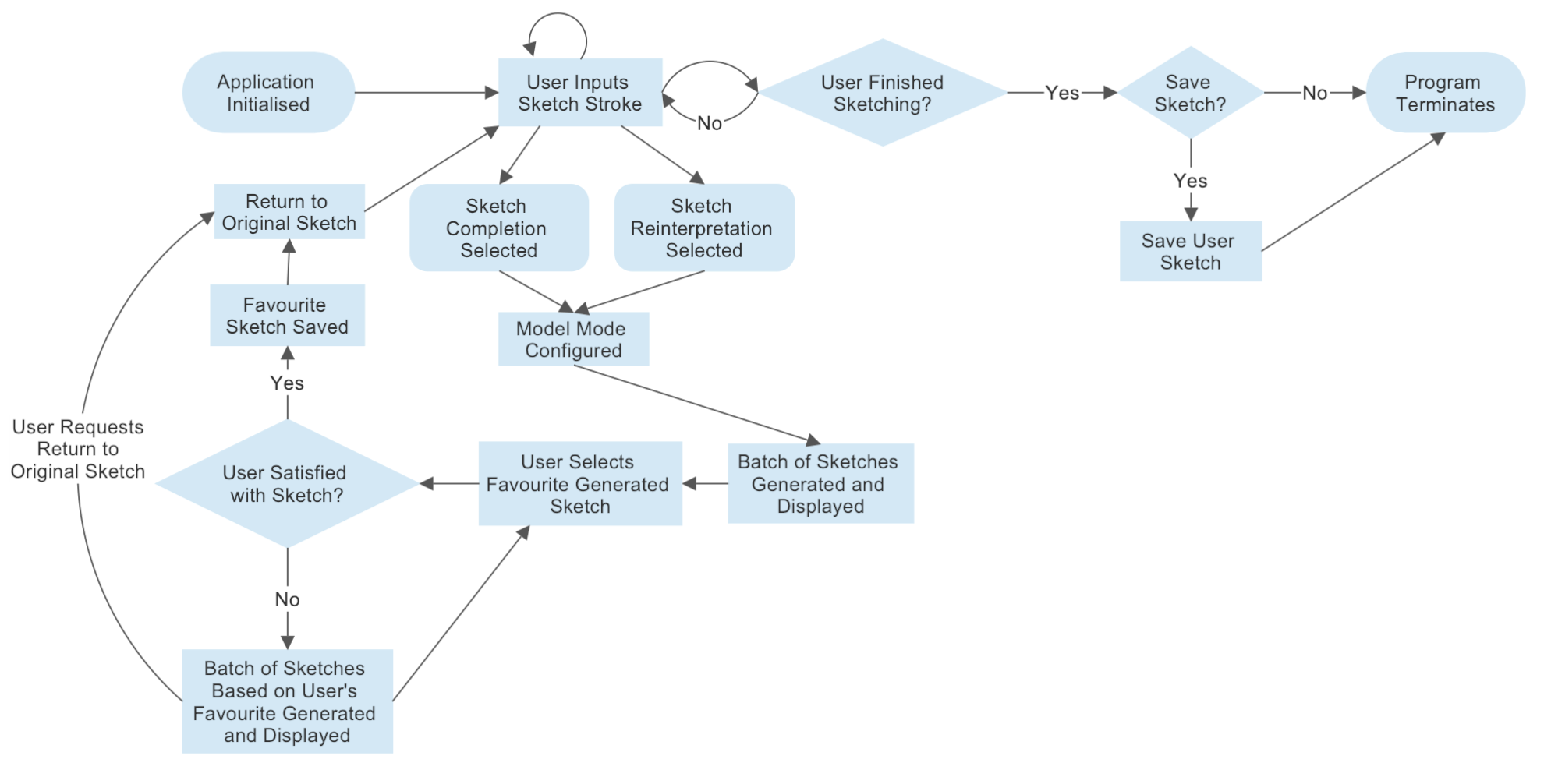

The idea behind this tool is that a user draws some strokes in the sketching window and then requests that the sketch be either re-interpreted or completed by a pre-trained model (in this case, a model trained on owls). The user is then shown a grid of the resulting sketches, each sampled around a focal point in the model's latent space.

The user may then select their favourite sketch, and the focal point is moved towards it so that newly generated sketches resemble the favourite more closely. This process may be repeated until the user finds a satisfactory sketch, which may then be saved as an SVG file.
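The selection loop described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: candidates are drawn from an isotropic Gaussian around the focal point, and the focal point moves a fixed fraction of the way towards the chosen latent vector. The names `sample_grid` and `move_focus`, the latent size of 128, and the `spread` and `step` values are hypothetical; the project's actual sampling and update rules are not specified here.

```python
import numpy as np


def sample_grid(focus, n, spread, rng):
    """Draw n latent vectors from an isotropic Gaussian centred on `focus`."""
    return focus + spread * rng.standard_normal((n, focus.shape[0]))


def move_focus(focus, favourite, step=0.5):
    """Move the focal point a fraction `step` of the way towards `favourite`."""
    return focus + step * (favourite - focus)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    focus = np.zeros(128)  # assumed latent size, for illustration only

    for _ in range(3):
        # One latent vector per cell of a 3x3 grid; each would be decoded
        # into a sketch by the model and shown to the user.
        candidates = sample_grid(focus, n=9, spread=0.5, rng=rng)
        favourite = candidates[0]  # stand-in for the user's selection
        focus = move_focus(focus, favourite)
```

A `step` below 1 keeps some of the previous focal point, so a single selection nudges the results instead of replacing them outright; reducing `spread` over successive rounds would be one way to narrow the grid as the user converges on a sketch.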

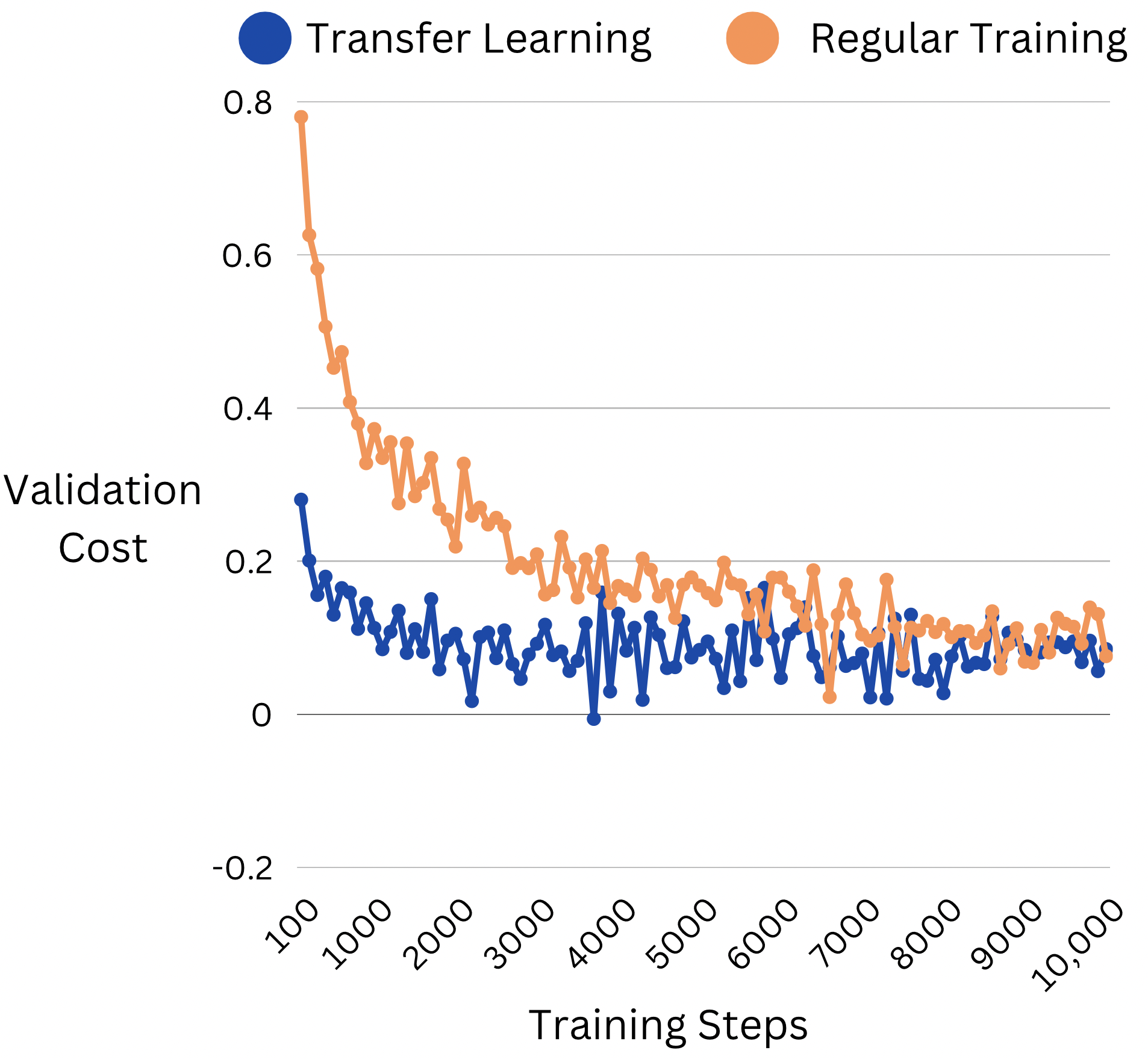

In addition, this project explored the viability of transfer learning within the Sketch-RNN framework by comparing training results on pre-trained models with those on freshly initialised models.